3D Generation Closes the Physical Gap

While we are still marveling at Midjourney's striking artwork and Sora's cinematic videos, a more fundamental question is quietly emerging: why do AI-generated 3D worlds so often feel "plastic"?

The answer is simple: they lack a physical soul. Every object in the real world obeys physical laws: a chair has weight and hardness, a laptop screen can be opened, and materials determine how an object feels to the touch and how it dissipates heat. Existing AI-generated 3D models, however, care only about whether the appearance is realistic and largely ignore these physical properties. The flaw becomes evident in demanding applications such as physical simulation, robotic grasping, and embodied intelligence.

Researchers from Nanyang Technological University and Shanghai AI Lab have taken aim at this core issue. Their PhysX-3D project sets out to reshape the 3D generation field with a clear and ambitious goal: to break the "virtual spell" of 3D generation and let AI create truly "down-to-earth" 3D worlds with a physical soul.

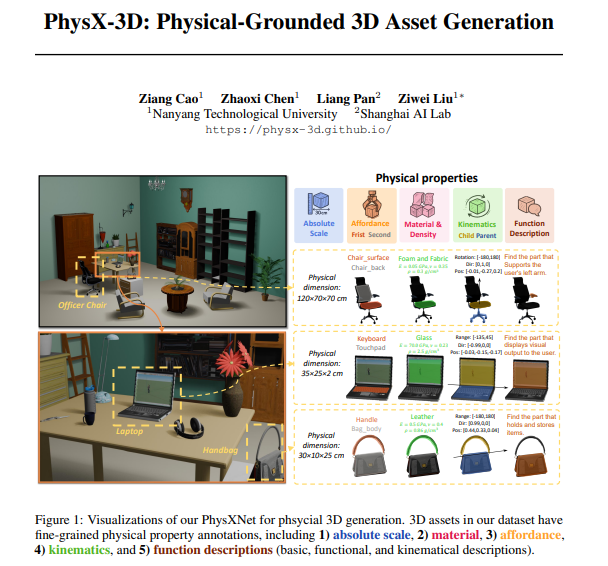

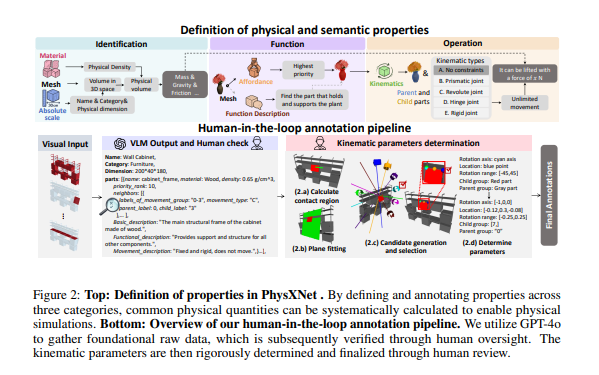

The PhysX-3D team proposed "Five Core Questions" for 3D models, one for each physical dimension that underpins a realistic 3D world. The first is absolute size: AI must determine whether the generated object is a 1.8-meter wardrobe or an 18-centimeter model. The second is material: the system needs to know whether the object is made of glass, metal, or sponge, which determines density, hardness, elasticity, and other physical parameters.

The third is functional affordance: AI must understand an object's core function and which parts are touched most often. For example, the main function of a chair is "sitting," so the seat and backrest are the most important interactive areas. The fourth is kinematics, which covers which parts can move, how they move, their range of motion, and the parent-child relationships between components. The fifth is functional description: AI must explain the object's purpose and functionality in natural language.
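To make the five dimensions concrete, here is a minimal sketch of how one annotated object could be represented in code. The class names, field names, and all values are hypothetical and chosen only for illustration; they are not PhysXNet's actual schema.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Part:
    """One component of an object (hypothetical fields, not the PhysXNet schema)."""
    name: str
    material: str                       # material, e.g. "metal" or "fabric"
    affordance_rank: int                # 1 = part that is touched most often
    parent: Optional[str] = None        # parent part in the kinematic tree
    joint_type: str = "fixed"           # "fixed", "revolute", or "prismatic"
    joint_axis: Tuple[float, float, float] = (0.0, 0.0, 1.0)
    motion_range: Tuple[float, float] = (0.0, 0.0)  # degrees or meters


@dataclass
class PhysicalObject:
    """An object annotated along the five dimensions described above."""
    name: str
    size_m: Tuple[float, float, float]  # absolute bounding-box size in meters
    description: str                    # natural-language functional description
    parts: List[Part] = field(default_factory=list)

    def movable_parts(self) -> List[str]:
        """Names of parts whose joint allows motion."""
        return [p.name for p in self.parts if p.joint_type != "fixed"]

    def primary_contact(self) -> str:
        """Name of the part with the highest affordance priority."""
        return min(self.parts, key=lambda p: p.affordance_rank).name


# Illustrative values only; they are not taken from the dataset.
chair = PhysicalObject(
    name="office chair",
    size_m=(0.6, 0.6, 1.1),
    description="A chair for sitting at a desk.",
    parts=[
        Part("base", "metal", affordance_rank=3),
        Part("seat", "sponge", affordance_rank=1, parent="base"),
        Part("backrest", "fabric", affordance_rank=2, parent="seat",
             joint_type="revolute", joint_axis=(1.0, 0.0, 0.0),
             motion_range=(0.0, 20.0)),
    ],
)

print(chair.movable_parts())    # ['backrest']
print(chair.primary_contact())  # seat
```

Keeping kinematics on each part, with a `parent` reference, mirrors the parent-child relationships mentioned above, while size and description stay at the object level.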

Because no comprehensive, physically annotated 3D dataset was available, the research team showed a very engineer-like kind of romance: if there was no suitable "textbook," they would write one themselves. The result is PhysXNet, described as the first 3D dataset to systematically annotate all five physical dimensions, containing over 26,000 finely annotated 3D objects. The extended version, PhysXNet-XL, includes over 6 million physically annotated 3D models.

The dataset was built with a "human-machine collaboration" annotation pipeline. First, AI systems such as GPT-4o produced initial automated annotations; human experts then reviewed and refined them. For the kinematic parameters, the most complex of the five, the team designed a dedicated multi-step process running from contact area calculation through plane fitting to motion axis generation, so that each parameter is physically plausible.
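The article does not spell out how plane fitting leads to a motion axis, so the following is only one plausible reading, sketched with NumPy. It assumes the contact region between two parts is available as a set of 3D points, fits a least-squares plane to them with SVD, and proposes the plane normal through the centroid as a candidate axis. The function names and sample points are hypothetical.

```python
import numpy as np


def fit_plane(points):
    """Least-squares plane through 3D points; returns (centroid, unit normal)."""
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(pts - centroid)
    normal = vt[-1]
    return centroid, normal / np.linalg.norm(normal)


def candidate_axis(contact_points):
    """Propose a motion axis (origin, direction) from a contact region.

    Assumption for this sketch: the axis is the contact-plane normal,
    passing through the centroid of the contact points.
    """
    origin, direction = fit_plane(contact_points)
    # The sign of an SVD normal is arbitrary; make the dominant component
    # positive so that the result is deterministic.
    if direction[np.argmax(np.abs(direction))] < 0:
        direction = -direction
    return origin, direction


# Hypothetical contact points lying on the horizontal plane z = 0.5.
contact = [(0, 0, 0.5), (1, 0, 0.5), (0, 1, 0.5), (1, 1, 0.5), (0.5, 0.5, 0.5)]
origin, axis = candidate_axis(contact)
print(origin, axis)
```

In a human-in-the-loop pipeline like the one described, a candidate such as this would presumably be reviewed by an annotator rather than accepted blindly.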

With PhysXNet as a solid "textbook," the next step is to teach AI how to generate 3D models with physical properties. The PhysXGen generation framework adopts a strategy of "grafting" and "integration." It builds upon existing excellent geometric generation models, adding a dedicated "physical brain" module to understand and generate physical properties.

PhysXGen uses a dual-branch architecture. The structural branch inherits the geometric generation capabilities of a pre-trained model and is responsible for high-quality shape and texture: the "skin" of the object. The physical branch is a new module that learns and generates the corresponding five physical properties: the "soul" of the object. The two branches are integrated through latent space alignment, which lets the model learn the connections between geometric features and physical properties.
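As a rough illustration of the dual-branch idea, the toy NumPy sketch below computes a structural latent from frozen weights, a physical latent conditioned on it, and an alignment penalty between the two. The article only says the branches are integrated through latent space alignment; the additive conditioning, the mean-squared-error penalty, the dimensions, and every name here are assumptions, not the PhysXGen implementation.

```python
import numpy as np

rng = np.random.default_rng(0)
GEOM_DIM, PHYS_DIM, LATENT_DIM = 16, 5, 8  # hypothetical sizes

# Stand-ins for network weights. In this sketch the structural weights play
# the role of a frozen pre-trained model; the others would be trained.
w_struct = rng.normal(size=(GEOM_DIM, LATENT_DIM))
w_phys = rng.normal(size=(PHYS_DIM, LATENT_DIM))
w_fuse = rng.normal(size=(LATENT_DIM, LATENT_DIM))


def structural_latent(geom_feat):
    """Structural branch: geometry features -> latent ("skin")."""
    return np.tanh(geom_feat @ w_struct)


def physical_latent(phys_feat, z_struct):
    """Physical branch: physical features, conditioned on the structural latent."""
    return np.tanh(phys_feat @ w_phys + z_struct @ w_fuse)


def alignment_loss(z_struct, z_phys):
    """Assumed alignment term: mean squared distance between the two latents."""
    return float(np.mean((z_struct - z_phys) ** 2))


geom = rng.normal(size=(4, GEOM_DIM))  # batch of 4 hypothetical objects
phys = rng.normal(size=(4, PHYS_DIM))
z_s = structural_latent(geom)
z_p = physical_latent(phys, z_s)
loss = alignment_loss(z_s, z_p)
print(z_s.shape, z_p.shape, loss >= 0.0)
```

A training loop would add this penalty to the reconstruction losses of both branches so that geometry and physical properties end up sharing a compatible latent space.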

The experimental results are encouraging. Compared with the conventional "geometry first, then GPT" approach, PhysXGen came out clearly ahead. On geometric appearance quality, the new system not only preserved the strengths of the pre-trained model but improved on them. On physical property prediction, PhysXGen outperformed the baselines across all five core dimensions, with material and affordance prediction errors reduced by 64% and 72%, respectively.
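For readers unfamiliar with how such percentages are usually obtained, a relative error reduction is the drop in error divided by the baseline error. The snippet below shows the arithmetic with made-up error values; they are not the numbers reported in the paper.

```python
def relative_reduction(baseline_error, new_error):
    """Fraction by which new_error is lower than baseline_error."""
    if baseline_error <= 0:
        raise ValueError("baseline_error must be positive")
    return (baseline_error - new_error) / baseline_error


# Hypothetical errors: a baseline of 0.50 falling to 0.18 is a 64% reduction.
print(f"{relative_reduction(0.50, 0.18):.0%}")  # 64%
```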

Qualitative comparisons more intuitively demonstrate the advantages of PhysXGen. For a faucet model, traditional methods might even get basic rotational movement wrong, while PhysXGen can accurately generate the rotational joint and correct parent-child component relationships. For an office chair, the new system can precisely predict sponge and fabric materials, as well as the rotational motion characteristics of the backrest.

The significance of the PhysX-3D project goes beyond technology itself. It points the entire 3D content generation field toward a new direction—from purely focusing on the "skin" of geometric modeling to achieving "soul" and "skin" together in physically grounded modeling. This transformation will profoundly impact the development of many fields, including robotics, autonomous driving, and virtual reality.

Of course, the path toward "physical AI" still holds many challenges. The long-tail distribution of real-world object sizes, the precise definition of complex kinematic relationships, and the gap between virtual and real environments all need further work. But PhysX-3D has already opened a door to a world of physical intelligence.

As this technology continues to mature, future AI will no longer be just a "dreamer" of the virtual world, but rather a powerful "builder" capable of truly understanding and creating 3D worlds that follow physical laws, transforming the boundaries of our perception of AI creativity.

Paper link: https://arxiv.org/pdf/2507.12465