NeuralOS, an open-source AI operating system developed by a Chinese team, is now available. Inspired by researcher Andrej Karpathy's bold predictions about the future of graphical user interfaces (GUIs), it predicts and simulates the Windows interface in real time, rendering the on-screen result of each user action.

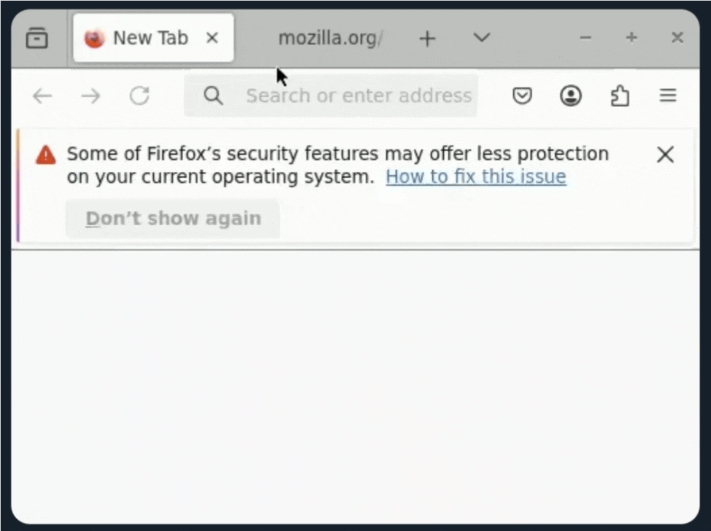

In NeuralOS, users simply move the mouse, click icons, or type text, and the neural network responds quickly with output that looks almost identical to a familiar computer interface. The project is an early realization of the "AI-era GUI" concept that Karpathy proposed earlier this year: in his view, the future GUI will be a personalized, fluid, and interactive canvas.

NeuralOS relies on two core modules: a recurrent neural network (RNN) and a diffusion-based neural renderer. The RNN tracks changes in the computer's state in real time, which keeps the system responsive and smooth. The renderer turns that state and the user's actions into what appears on the screen, such as pop-up windows and icon changes.
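The division of labor between the two modules can be illustrated with a toy sketch. This is not the NeuralOS implementation: the class names, sizes, random weights, and the simple "move from noise toward a target" loop are all assumptions made for illustration, standing in for a trained RNN and a learned diffusion model.

```python
import math
import random

STATE_SIZE = 4   # hypothetical hidden-state width
FRAME_SIZE = 6   # hypothetical "screen" made of 6 pixel values


def make_matrix(rows, cols, rng):
    """Return a rows x cols matrix of small random (untrained) weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


class StateTracker:
    """Toy recurrent cell: h_t = tanh(W_h h_{t-1} + W_x x_t)."""

    def __init__(self, input_size, rng):
        self.w_h = make_matrix(STATE_SIZE, STATE_SIZE, rng)
        self.w_x = make_matrix(STATE_SIZE, input_size, rng)
        self.hidden = [0.0] * STATE_SIZE

    def step(self, user_input):
        # The new state depends on both the previous state and the new input.
        recurrent = matvec(self.w_h, self.hidden)
        driven = matvec(self.w_x, user_input)
        self.hidden = [math.tanh(r + d) for r, d in zip(recurrent, driven)]
        return self.hidden


class ToyRenderer:
    """Stand-in for a diffusion renderer: start from noise and refine it
    step by step toward a frame conditioned on the tracked state."""

    def __init__(self, rng, steps=20):
        self.w_out = make_matrix(FRAME_SIZE, STATE_SIZE, rng)
        self.rng = rng
        self.steps = steps

    def render(self, hidden):
        target = matvec(self.w_out, hidden)
        frame = [self.rng.gauss(0.0, 1.0) for _ in range(FRAME_SIZE)]
        for _ in range(self.steps):
            # Each step halves the distance between the frame and the target.
            frame = [f + 0.5 * (t - f) for f, t in zip(frame, target)]
        return frame


def simulate(events, seed=0):
    """Feed (x, y, click) events through the tracker, rendering one frame each."""
    rng = random.Random(seed)
    tracker = StateTracker(input_size=3, rng=rng)
    renderer = ToyRenderer(rng)
    return [renderer.render(tracker.step(event)) for event in events]


frames = simulate([[0.1, 0.2, 0.0], [0.5, 0.5, 1.0]])
```

Each input event produces one frame, and because the tracker carries its hidden state forward, the second frame depends on the first event as well as the second. That carried-over state is what lets a model of this shape remember, for example, that a window was opened several frames ago.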

To train NeuralOS efficiently, the development team used a large number of recorded operation videos of two types: random interactions and real interactions. After training, NeuralOS can accurately predict how the screen responds to each user action, but it still struggles with fast keyboard input.
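To make the "random interactions" category concrete, here is a minimal sketch of how a random event trace might be generated before being replayed and recorded. The event format, screen size, and step ranges are hypothetical and are not taken from the NeuralOS data pipeline.

```python
import random

SCREEN_W, SCREEN_H = 512, 384          # hypothetical screen resolution
KEYS = "abcdefghijklmnopqrstuvwxyz"    # hypothetical key set


def random_interactions(n_events, seed=0):
    """Generate a reproducible trace of random mouse and keyboard events."""
    rng = random.Random(seed)
    x, y = SCREEN_W // 2, SCREEN_H // 2   # start with the cursor centered
    events = []
    for _ in range(n_events):
        kind = rng.choice(["move", "click", "key"])
        if kind == "move":
            # Take a small random step and clamp the cursor to the screen.
            x = min(max(x + rng.randint(-40, 40), 0), SCREEN_W - 1)
            y = min(max(y + rng.randint(-40, 40), 0), SCREEN_H - 1)
            events.append({"type": "move", "x": x, "y": y})
        elif kind == "click":
            events.append({"type": "click", "x": x, "y": y})
        else:
            events.append({"type": "key", "key": rng.choice(KEYS)})
    return events


trace = random_interactions(100, seed=42)
```

Random traces like this cover unusual cursor positions and input combinations cheaply, while recordings of real interactions supply the purposeful sequences, such as opening a folder and then a file, that random sampling rarely produces.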

The NeuralOS team has released an online demo where users can interact with the model through simple operations and try the system in real time. Although it is still being optimized, it suggests that future operating systems will no longer consist only of buttons and menus, but will offer an experience generated dynamically by AI.

Now that the code is open source, others can build on NeuralOS, and it may find wide application in human-computer interaction.