With the rapid development of AI technology, large models now perform remarkably well at image upscaling, and restoring low-resolution images to high definition is no longer a major obstacle. In real-world video super-resolution (RealVSR), however, substantially improving sharpness while keeping the result smooth from frame to frame remains a technical challenge. The recently released DLoRAL framework, jointly developed by The Hong Kong Polytechnic University and the OPPO Research Institute, offers an open-source solution for video enhancement built on a dual LoRA architecture and efficient single-step generation, and it has drawn wide attention in the industry. Below, AIbase walks through the highlights and potential of this technology.

Project address: https://github.com/yjsunnn/DLoRAL

Innovative Dual LoRA Architecture, Balancing Temporal and Spatial Aspects

The DLoRAL (Dual LoRA Learning) framework builds on a pre-trained diffusion model (Stable Diffusion V2.1) and approaches video super-resolution with a dual LoRA architecture. At its core are two specially designed LoRA modules:

CLoRA: Focuses on temporal consistency between video frames. By extracting temporal features from the low-quality input video, CLoRA keeps transitions between adjacent frames natural and avoids the flickering and jumping that are common in traditional methods.

DLoRA: Responsible for enhancing spatial detail. DLoRA restores high-frequency information, noticeably improving sharpness and detail so that low-resolution videos approach high-definition quality.

This dual LoRA design decouples the two goals of temporal consistency and spatial detail enhancement. By embedding lightweight modules into the pre-trained diffusion model, it reduces computational costs while improving generation quality.
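To make the decoupling idea concrete, here is a minimal sketch of two low-rank updates attached to a single frozen weight matrix. It is not DLoRAL's code: the matrix sizes, the rank, the values, and the names `W`, `B_c`, `A_c`, `B_d`, `A_d`, and `forward` are all hypothetical, chosen only to show that each branch can be enabled or changed without touching the other or the frozen weight.

```python
# Illustrative only: two rank-1 LoRA-style updates on one frozen 3x3 weight.
# Sizes, values, and names are assumptions, not taken from the DLoRAL repository.

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Frozen pre-trained weight: never updated during adapter training.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0]]

# Each adapter contributes a low-rank update B @ A (B is 3x1, A is 1x3).
B_c, A_c = [[0.1], [0.0], [0.0]], [[1.0, 1.0, 0.0]]  # "consistency" branch
B_d, A_d = [[0.0], [0.0], [0.2]], [[0.0, 1.0, 1.0]]  # "detail" branch

def forward(x, use_c=True, use_d=True):
    """Return W @ x plus the low-rank updates of the enabled branches."""
    y = matmul(W, x)
    if use_c:
        y = add(y, matmul(B_c, matmul(A_c, x)))
    if use_d:
        y = add(y, matmul(B_d, matmul(A_d, x)))
    return y

x = [[1.0], [2.0], [3.0]]          # a 3x1 input column vector
y_base = forward(x, use_c=False, use_d=False)
y_c_only = forward(x, use_d=False)
y_both = forward(x)
```

In this toy setup each rank-1 adapter stores 6 numbers next to the 9 numbers of the frozen matrix; for the much larger layers of a real diffusion model, the adapter is a small fraction of the layer it modifies, which is where the lightweight character of LoRA comes from.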

Two-Stage Training Strategy, Achieving Efficiency and Quality

DLoRAL's training process adopts a two-stage strategy, consisting of a consistency stage and an enhancement stage that are optimized alternately:

Consistency Stage: Optimizes temporal coherence between video frames by training the CLoRA module and the Cross-Frame Retrieval (CFR) module with a consistency-related loss function. This stage keeps the generated video smooth even in dynamic scenes.

Enhancement Stage: Freezes the CLoRA and CFR modules and trains only DLoRA, using techniques such as classifier score distillation (CSD) to further enhance high-frequency details and make the visuals sharper and clearer.

This alternating training approach lets each stage concentrate on a single objective. At inference, CLoRA and DLoRA are merged into the frozen diffusion UNet, giving efficient, high-quality video output. Compared to traditional multi-step iterative super-resolution methods, DLoRAL's inference is about 10 times faster.
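The schedule and the final merge described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's training loop: the function names `trainable_modules`, `schedule`, and `merge` are hypothetical, the sketch assumes DLoRA is held fixed during the consistency stage (the text does not say so explicitly), and the tiny 2x2 matrices stand in for real UNet weights.

```python
# Illustrative only: which modules train in each stage, and how low-rank
# adapters can be folded into a frozen weight for inference.
# All names and values are assumptions, not the DLoRAL implementation.

def trainable_modules(stage):
    """Modules that receive updates in a stage; everything else stays frozen."""
    if stage == "consistency":
        return {"CLoRA", "CFR"}   # assumption: DLoRA is not updated here
    if stage == "enhancement":
        return {"DLoRA"}          # CLoRA and CFR are frozen, per the text
    raise ValueError(f"unknown stage: {stage}")

def schedule(num_rounds):
    """Alternate the two stages for a given number of rounds."""
    for _ in range(num_rounds):
        for stage in ("consistency", "enhancement"):
            yield stage, trainable_modules(stage)

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two matrices of the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def merge(W, adapters):
    """Fold every low-rank update B @ A into a copy of the frozen weight W."""
    merged = [row[:] for row in W]
    for B, A in adapters:
        merged = add(merged, matmul(B, A))
    return merged

W = [[1.0, 0.0], [0.0, 1.0]]              # frozen base weight
clora = ([[0.5], [0.0]], [[1.0, 0.0]])    # (B, A) for the consistency adapter
dlora = ([[0.0], [0.25]], [[0.0, 1.0]])   # (B, A) for the detail adapter

stages = [name for name, _ in schedule(2)]
W_merged = merge(W, [clora, dlora])

x = [[2.0], [4.0]]
y_merged = matmul(W_merged, x)            # one matrix multiply at inference
y_branches = add(add(matmul(W, x), matmul(clora[0], matmul(clora[1], x))),
                 matmul(dlora[0], matmul(dlora[1], x)))
```

Because the merged weight produces the same output as the base weight plus both adapter branches, folding the adapters in before inference adds no extra per-layer computation compared with the original network.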

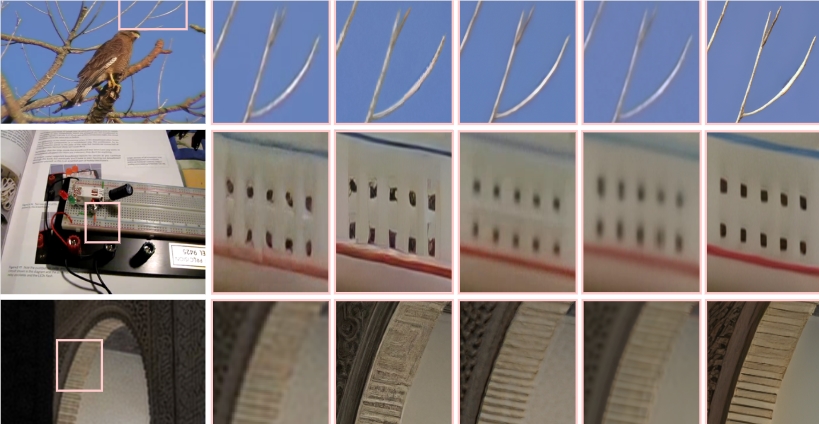

Open Source Empowerment, Benefiting Academia and Industry

The open-source release of DLoRAL is good news for academia and industry. Its code, training data, and pre-trained models were made publicly available on GitHub on June 24, 2025. The project page also provides a detailed 2-minute explanatory video and rich visual demonstrations. DLoRAL not only surpasses existing RealVSR methods in visual quality but also performs well on metrics such as PSNR and LPIPS. However, because it inherits the 8x-downsampling variational autoencoder (VAE) of Stable Diffusion, DLoRAL still has limitations in restoring very small text. Future improvements are worth looking forward to.
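A quick back-of-the-envelope calculation shows why an 8x spatial downsampling makes tiny text hard to restore. The helper names `latent_size` and `latent_cells_covered`, the 720x1280 frame, and the 6-pixel glyph are hypothetical examples; only the factor of 8 comes from the text above.

```python
# Illustrative arithmetic only: how much latent resolution remains after
# an 8x spatial downsampling. Frame and glyph sizes are made-up examples.

VAE_FACTOR = 8  # spatial downsampling factor of the VAE, as stated above

def latent_size(height, width, factor=VAE_FACTOR):
    """Spatial size of the latent grid for a frame of the given pixel size."""
    return height // factor, width // factor

def latent_cells_covered(pixels, factor=VAE_FACTOR):
    """How many latent cells a feature of the given pixel extent spans."""
    return pixels / factor

frame_latent = latent_size(720, 1280)    # latent grid for a 720x1280 frame
glyph_cells = latent_cells_covered(6)    # a character about 6 pixels tall
```

Under these example numbers, a 6-pixel-tall character spans less than one latent cell in height, so its strokes must be recovered from a single latent position rather than from a spatial pattern.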

The Future Direction of Video Super-Resolution