Tencent has announced the open-source release of its new language model, **Hunyuan-A13B**, which introduces a **dynamic reasoning method** that lets the model switch between fast and deep "thinking" depending on task complexity.

Key Highlights: Dynamic Reasoning and MoE Architecture

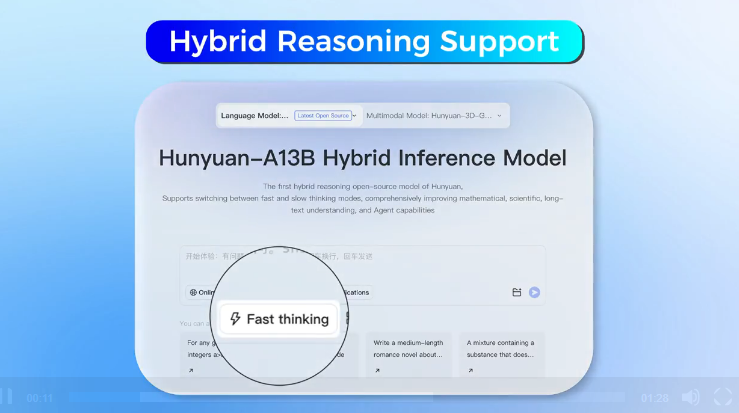

The core advantage of **Hunyuan-A13B** lies in its ability to **adjust the depth of reasoning in real time**. For simple queries, the model uses a **fast mode** that responds quickly with minimal reasoning steps; for more complex questions, it can activate a **deep reasoning process involving multiple steps of thinking**. Users can also control this behavior manually with specific commands: "**/think**" enables deep mode and "**/no_think**" disables it.
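As a rough illustration of the manual switch, the helper below attaches one of the two commands to a prompt. The function name `apply_reasoning_mode` is hypothetical, and placing the command at the start of the prompt is an assumption here; the exact placement the model expects should be checked against Tencent's documentation.

```python
from typing import Optional


def apply_reasoning_mode(prompt: str, deep: Optional[bool] = None) -> str:
    """Return the prompt with a reasoning-mode command attached.

    deep=True  -> request deep reasoning with "/think"
    deep=False -> request fast mode with "/no_think"
    deep=None  -> leave the prompt unchanged so the model decides on its own

    Assumption: the command is placed before the prompt text.
    """
    if deep is None:
        return prompt
    command = "/think" if deep else "/no_think"
    return f"{command} {prompt}"


# Hypothetical usage: force fast mode for a trivial question.
fast_prompt = apply_reasoning_mode("What is the capital of France?", deep=False)
# Hypothetical usage: force deep reasoning for a harder problem.
deep_prompt = apply_reasoning_mode("Prove that the square root of 2 is irrational.", deep=True)
```

Leaving `deep` unset keeps the prompt untouched, which corresponds to letting the model pick its own reasoning depth.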

The model employs a **Mixture of Experts (MoE) architecture** with a total of **80 billion parameters**, of which only **13 billion** are active during inference; because each token is processed by a subset of the experts, this lowers the compute cost per token. Additionally, **Hunyuan-A13B** supports a context window of up to **256,000 tokens**, significantly enhancing its ability to handle long texts.
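To put the parameter counts in perspective, the short calculation below works out what share of the model is active per token. It uses only the two figures quoted above; the variable names are illustrative.

```python
# Parameter counts as reported in the article.
TOTAL_PARAMS = 80_000_000_000   # all experts combined
ACTIVE_PARAMS = 13_000_000_000  # parameters used for a single token

# Share of the model that participates in each forward pass.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

print(f"Active share per token: {active_fraction:.2%}")
```

Roughly one sixth of the weights take part in any single forward pass. All 80 billion parameters still have to be stored, so the saving applies to per-token compute rather than to memory footprint.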

Training Data and Performance

According to Tencent's technical report, **Hunyuan-A13B** was trained on **20 trillion tokens** and optimized for reasoning tasks and broader use cases. To make the model more reliable on scientific tasks, Tencent specifically collected **250 billion tokens** of **STEM (Science, Technology, Engineering, and Mathematics)** data for training, including math textbooks, exams, open-source code from GitHub, logic puzzles, and scientific texts ranging from **high school to university level**.

Tencent claims that its **Hunyuan-A13B-Instruct** version is comparable to leading models from OpenAI, DeepSeek, and Alibaba (Qwen). The report states that on the **2024 American Invitational Mathematics Examination (AIME)**, **Hunyuan-A13B** achieved an accuracy of **87.3%**, outperforming OpenAI o1's 74.3%.