A recent study led by Nataliya Kosmyna and her team at the MIT Media Lab examines the cognitive costs of using large language models (LLMs) such as OpenAI's ChatGPT for tasks like academic writing. The research finds that although LLMs are convenient for individuals and businesses, relying on them can cause "cognitive debt" to accumulate, which may weaken learning skills over time.

The study recruited 54 participants and divided them into three groups: an LLM group (using only ChatGPT), a search engine group (using traditional search engines, with LLM use prohibited), and a pure mental effort group (using no tools). The study ran over four sessions. In the fourth session, participants from the LLM group were asked to write without any tools (the "LLM to pure mental effort group"), while participants from the pure mental effort group began using an LLM (the "pure mental effort to LLM group"). The research team recorded participants' brain activity with electroencephalography (EEG) to assess cognitive engagement and load, which revealed the neural activation patterns underlying the writing task. The team also analyzed the essays with natural language processing (NLP), interviewed participants after each session, and had the essays scored by both human teachers and an AI evaluator.

Key Findings: Weakened Brain Connectivity, Impaired Memory and Ownership

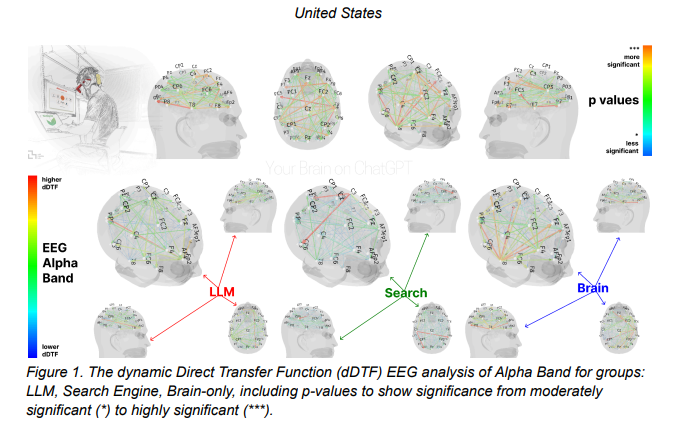

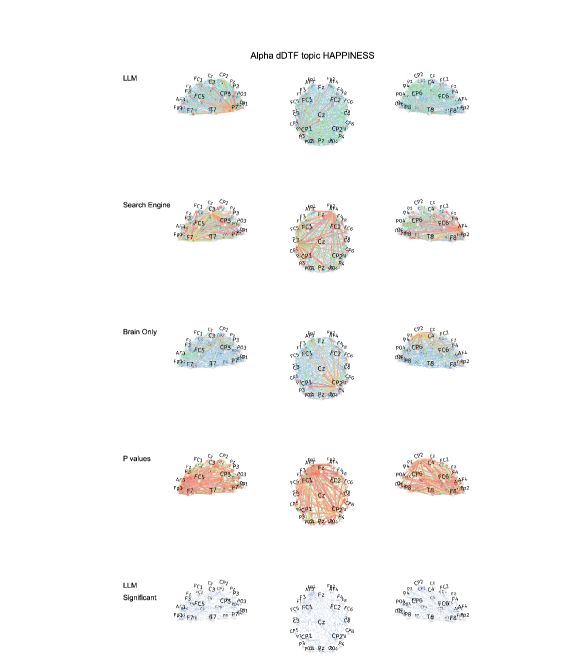

The study found significant differences in neural connectivity patterns among the pure mental effort, search engine, and LLM groups, reflecting different cognitive strategies. Brain connectivity decreased systematically as external support increased: the pure mental effort group showed the strongest and most widespread network connectivity, the search engine group fell in the middle, and the LLM group showed the weakest overall coupling.

In the fourth session in particular, participants in the "LLM to pure mental effort group" showed weaker neural connectivity and under-engagement of alpha and beta networks. Alpha-band connectivity is typically associated with internal attention, semantic processing, and creative ideation, while beta-band activity relates to active cognitive processing, focused attention, and sensorimotor integration. These results suggest that participants who had previously relied on LLMs engaged fewer neural resources for planning and generating content once the tool was taken away. This is consistent with reports of cognitive offloading, in which reliance on AI systems can encourage passive approaches and weaken critical thinking.

In terms of memory, participants in the LLM group had clear difficulty quoting from the essays they had just written, and often could not quote them correctly at all. This corresponds to the LLM group's lower low-frequency connectivity, especially in the theta and alpha bands, which are closely tied to episodic memory consolidation and semantic encoding. It suggests that LLM users may bypass deep memory encoding, passively incorporating tool-generated content without integrating it into their memory networks.

Participants in the LLM group also generally reported a weaker sense of ownership over their essays. The search engine group reported stronger ownership, though still less than the pure mental effort group. These behavioral differences track the changes in neural connectivity, highlighting the potential impact of LLM use on cognitive agency.

Cumulative Cognitive Debt: Balancing Efficiency and Deep Learning

The study points out that while LLMs offer clear efficiency gains and lower immediate cognitive load, this convenience may come at the expense of deep learning over time. The paper frames this as "cognitive debt": repeated reliance on external systems such as LLMs replaces the effortful cognitive processes that independent thinking requires. It defers mental effort in the short term, but in the long run can lead to weaker critical inquiry, greater susceptibility to manipulation, and reduced creativity.

Participants in the pure mental effort group, despite facing higher cognitive load, showed stronger memory, higher semantic accuracy, and a firmer sense of ownership of their work. The "pure mental effort to LLM group," by contrast, showed a significant increase in brain connectivity when they first used AI to help rewrite their essays. This may reflect the cognitive demands of integrating AI suggestions with existing knowledge, and suggests that the timing of AI tool introduction could have a positive effect on neural integration.

Far-reaching Impact on Educational Contexts and Future Outlook

The research team believes these findings have significant implications for education. Excessive reliance on AI tools may inadvertently hinder deep cognitive processing, knowledge retention, and genuine engagement with written material. Users who lean too heavily on AI may achieve surface-level fluency without internalizing knowledge or developing a sense of ownership.

The study recommends that educational interventions combine AI-assisted work with "tool-free" learning phases to support both immediate skill transfer and long-term neural development. In the early stages of learning, full neural engagement is crucial for building robust writing networks; in later practice phases, selective AI support can reduce extraneous cognitive load, improving efficiency without undermining the networks already established.

The researchers also stress that as AI-generated content increasingly saturates datasets and the line between human thought and generative AI blurs, future research should prioritize collecting writing samples produced without LLM assistance, so that "fingerprint" representations capable of identifying individual authors' styles can be developed.

Ultimately, the study calls for careful consideration of how integrating LLMs into educational and information contexts may affect cognitive development, critical thinking, and intellectual independence. LLMs can reduce the friction of answering questions, but this convenience carries a cognitive cost: it diminishes users' willingness to critically evaluate LLM outputs. This points to a new form of the "echo chamber" effect, in which algorithmically curated content shapes how users encounter information.

(The research paper is titled "Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task," with Nataliya Kosmyna of the MIT Media Lab as lead author.)