As large language models (LLMs) see increasingly widespread use, the limitations of current inference methods are becoming more apparent. Traditional autoregressive generation produces tokens one at a time, which is inefficient and leaves much of the parallel computing capability of modern hardware unused. To address this, a research team from Carnegie Mellon University (CMU) and NVIDIA has introduced a new generative model called Multiverse, designed to support native parallel generation and to change how LLM inference is approached.

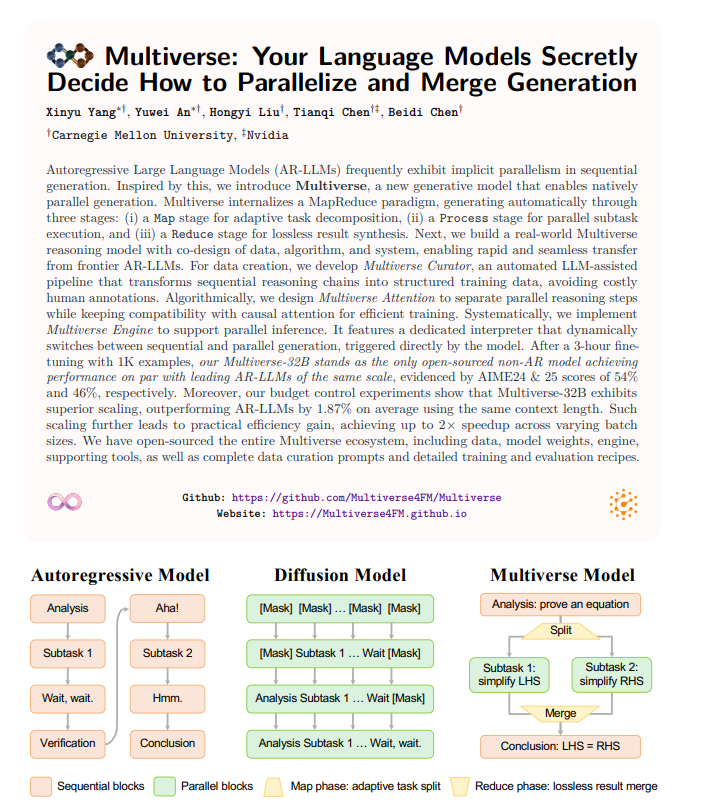

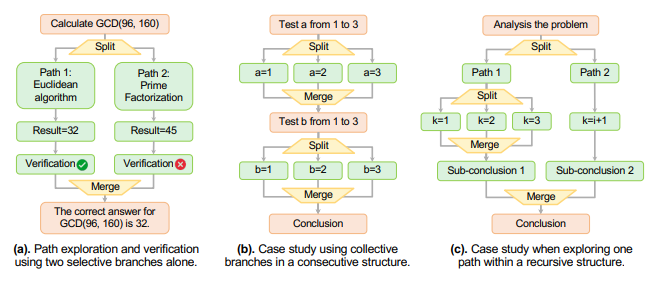

Multiverse is not only about speeding up generation; it also rethinks the model's architecture. The researchers found that the output of current mainstream large language models already contains a form of implicit parallelism. Building on this finding, the Multiverse framework adopts a MapReduce-like structure that divides generation into three stages: adaptive task decomposition, parallel execution of subtasks, and lossless merging of results. This design makes better use of available computational resources and enables more efficient inference.
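The three stages described above can be sketched as a toy pipeline. This is a minimal illustration of the MapReduce-style control flow only, not the Multiverse implementation: the function names (`decompose`, `solve_subtask`, `merge`, `map_reduce_generate`), the `;`-based splitting rule, and the use of a thread pool are all assumptions made for the example, and `solve_subtask` is a stub standing in for a model generating one branch.

```python
from concurrent.futures import ThreadPoolExecutor


def decompose(prompt: str) -> list[str]:
    """Map stage (toy): split a prompt into independent subtasks on ';'."""
    return [part.strip() for part in prompt.split(";") if part.strip()]


def solve_subtask(subtask: str) -> str:
    """Process stage (toy): stub standing in for a model generating one branch."""
    return f"[{subtask}] -> {len(subtask.split())} words"


def merge(results: list[str]) -> str:
    """Reduce stage (toy): keep every branch output, in the original order."""
    return "\n".join(results)


def map_reduce_generate(prompt: str, max_workers: int = 4) -> str:
    """Run decomposition, parallel execution, and merging in sequence."""
    subtasks = decompose(prompt)
    if not subtasks:
        return ""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as the inputs,
        # so no branch output is dropped or reordered before merging.
        results = list(pool.map(solve_subtask, subtasks))
    return merge(results)


if __name__ == "__main__":
    print(map_reduce_generate("check the base case; check the inductive step"))
```

In this sketch the merge is "lossless" only in the simple sense that every branch output is preserved in order; how Multiverse merges branch results inside the model is not described in this article.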

According to the reported experiments, the Multiverse-32B model shows a performance improvement of nearly 2% over autoregressive models at the same context length. Separately, it scales efficiently, running up to two times faster across different batch sizes. To make the work more widely usable, the research team has open-sourced the entire Multiverse ecosystem, including datasets, model weights, and training details, so that other researchers can explore it further.

In practice, Multiverse adapts to the needs of each generation task: specialized control tags let the model switch dynamically between sequential and parallel generation while keeping the output coherent and logically consistent. The approach brings a fresh direction to natural language processing, and its performance in real-world applications will be worth watching.
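To make the idea of tag-driven switching concrete, the sketch below splits a piece of text into sequential and parallel segments. The tag names `<Parallel>` and `<Path>` are placeholders chosen for this example; the article does not specify the exact tag vocabulary, and the helper `split_segments` is hypothetical.

```python
import re

# Placeholder tag names for illustration; the article does not spell out
# the actual control-tag syntax used by the model.
PARALLEL_BLOCK = re.compile(r"<Parallel>(.*?)</Parallel>", re.DOTALL)
PATH = re.compile(r"<Path>(.*?)</Path>", re.DOTALL)


def split_segments(text: str) -> list[tuple[str, object]]:
    """Split text into ("sequential", str) and ("parallel", [str, ...]) segments."""
    segments: list[tuple[str, object]] = []
    cursor = 0
    for match in PARALLEL_BLOCK.finditer(text):
        # Text before a parallel block is treated as ordinary sequential output.
        before = text[cursor:match.start()].strip()
        if before:
            segments.append(("sequential", before))
        # Each <Path> inside the block is an independent branch.
        paths = [p.strip() for p in PATH.findall(match.group(1))]
        segments.append(("parallel", paths))
        cursor = match.end()
    tail = text[cursor:].strip()
    if tail:
        segments.append(("sequential", tail))
    return segments


if __name__ == "__main__":
    demo = "Intro. <Parallel><Path>Case A</Path><Path>Case B</Path></Parallel> Done."
    for kind, content in split_segments(demo):
        print(kind, content)
```

A scheduler built on such a parser could dispatch each parallel segment's branches concurrently and resume ordinary token-by-token generation once the block closes.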