Google today officially launched Gemini 2.5 Flash-Lite, the lightest and most cost-effective model in the Gemini 2.5 series. AI applications now reach into fields such as coding, translation, and reasoning, and the Gemini 2.5 release marks a further step for Google in inference speed and cost-effectiveness.

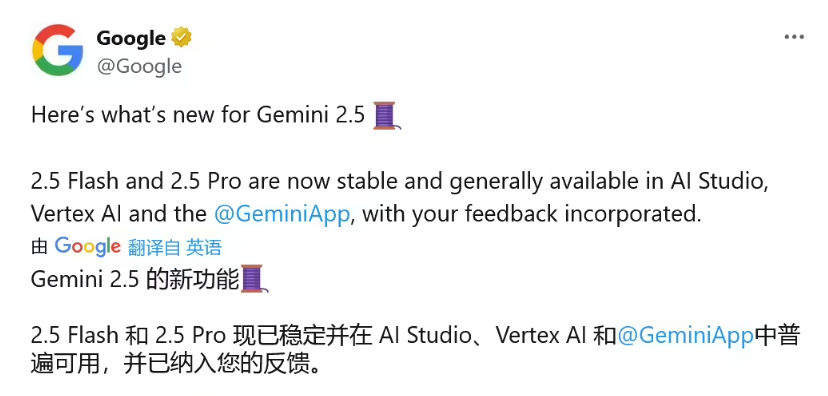

The Gemini 2.5 Flash and Pro models have undergone large-scale testing and have now reached stable status, so developers can deploy them in production environments with more confidence. Many well-known companies, including Spline and Snap, have already used these two models in real projects and reported good results.

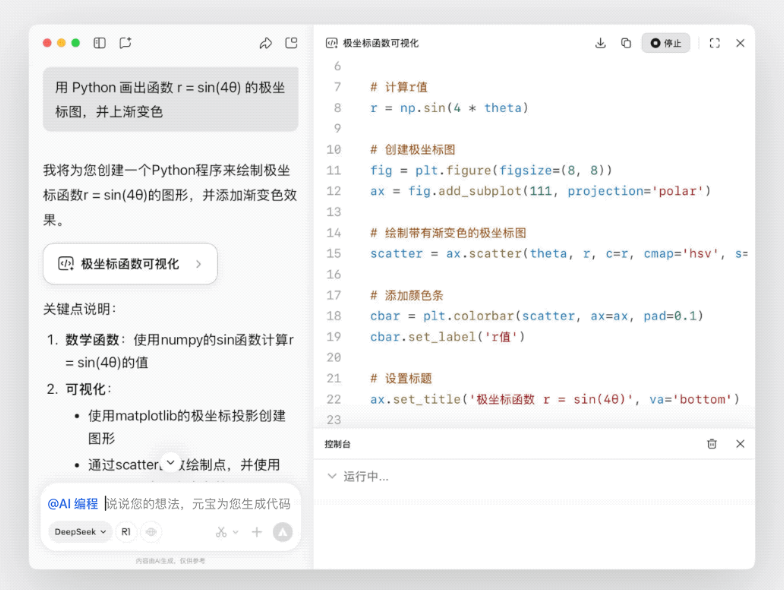

In this release, Google emphasized that the Gemini 2.5 series is designed to balance "cost, speed, and performance." Flash-Lite offers faster inference and lower latency, which makes it particularly well suited to real-time translation and high-throughput classification tasks. Compared with the previous 2.0 version, Flash-Lite shows significant overall improvements in areas such as coding, scientific computing, and multimodal analysis.

The model retains the core capabilities of the Gemini 2.5 series, including flexible control over the thinking (inference) budget and connections to external tools such as Google Search and code execution. It also supports very long contexts of up to 1 million tokens, which gives developers more room when building complex systems that work over large inputs.
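As a rough illustration of the two controls described above, the sketch below builds (but does not send) a request that sets a thinking budget and optionally enables Google Search. None of this comes from the announcement itself: the endpoint shape, the JSON field names (`thinkingConfig`, `thinkingBudget`, `google_search`), and the preview model ID are assumptions based on the public Gemini REST API, and `build_request` is a hypothetical helper. Check the current API reference before relying on any of them.

```python
import json

# Assumed values, not taken from the article. Verify against the official
# Gemini API reference before use.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL_ID = "gemini-2.5-flash-lite-preview-06-17"  # assumed preview model ID


def build_request(prompt, thinking_budget=0, use_search=False):
    """Return (url, body) for a generateContent call. Nothing is sent."""
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": {
            # Assumed field names: caps the tokens the model may spend on
            # internal reasoning; 0 is intended to turn thinking off.
            "thinkingConfig": {"thinkingBudget": thinking_budget},
        },
    }
    if use_search:
        # Assumed tool declaration for grounding with Google Search.
        body["tools"] = [{"google_search": {}}]
    url = f"{API_BASE}/models/{MODEL_ID}:generateContent"
    return url, body


if __name__ == "__main__":
    # A latency-sensitive translation request with thinking disabled.
    url, body = build_request("Translate to French: Good morning.")
    print(url)
    print(json.dumps(body, indent=2))
```

For a latency-sensitive task such as translation, a budget of 0 keeps responses fast. A harder reasoning task might pass a larger `thinking_budget` and set `use_search=True`. Sending the request additionally requires an API key from Google AI Studio, which is left out here.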

Developers can now access the stable versions of Gemini 2.5 Flash and Pro, as well as a preview version of Flash-Lite, through Google AI Studio and Vertex AI. In addition, the Gemini app has integrated Gemini 2.5 Flash and Pro, and a customized version has been deployed in Google Search to improve service efficiency for users.

In the fast-moving field of artificial intelligence, Gemini 2.5 Flash-Lite gives developers a more efficient and economical tool and lays a foundation for future AI applications.