Apple Inc. and a research team from Columbia University have jointly developed an AI prototype system called SceneScout. The system is designed to provide street view navigation assistance for the blind and low vision (BLV) community, helping users plan and carry out daily travel.

SceneScout combines the Apple Maps API with a multimodal large language model based on GPT-4o to generate personalized descriptions of the environment. This gives users more intuitive and specific navigation information than standard turn-by-turn directions. The research paper has been posted on the preprint platform arXiv and has not yet undergone peer review.

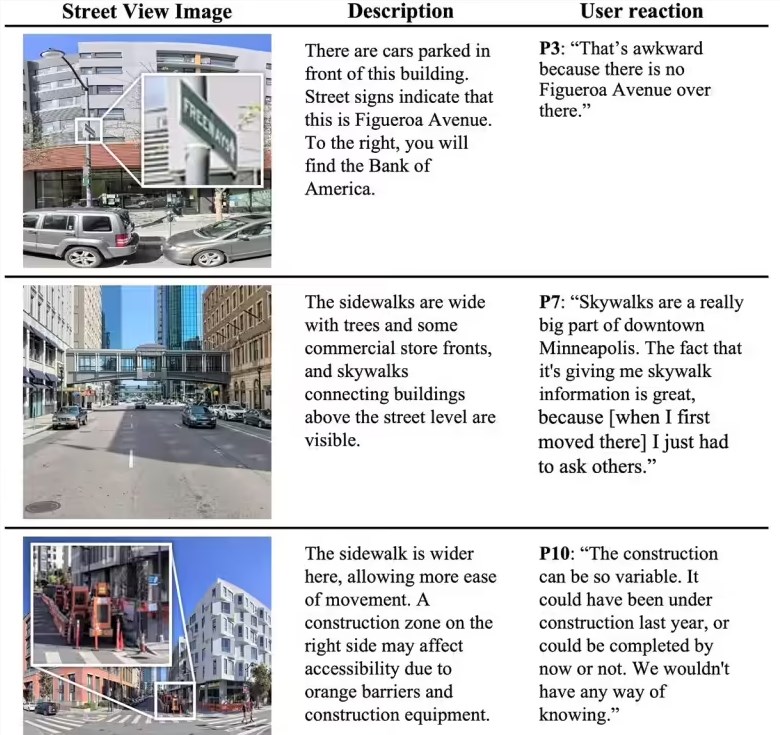

The system has two core features. The first is Route Preview, which lets users learn in advance what they will encounter along a route, such as the quality of sidewalks, the characteristics of intersections, and the location of nearby bus stops. This information is especially important for blind users, because it helps them understand their surroundings before they travel.

The second is Virtual Exploration, which lets users explore an area freely according to their needs. For example, a user can ask for "a quiet residential area near the park," and the system will provide directional guidance based on that request. SceneScout interprets the visible content from a pedestrian's perspective and generates structured text in short, medium, and long formats. The output works with various screen readers, making it convenient for blind users to read.
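As a rough illustration of the short, medium, and long output formats, the Python sketch below shows one way a structured description could be rendered at three levels of detail. The `SceneDescription` class, its fields, the `render` function, and the sample text are all hypothetical; none of them come from SceneScout or its paper.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SceneDescription:
    """Hypothetical container for one pedestrian-view description."""
    summary: str  # one-sentence overview
    details: List[str] = field(default_factory=list)  # most important first


def render(desc: SceneDescription, length: str = "short") -> str:
    """Return plain text for a screen reader at the requested level of detail."""
    if length == "short":
        return desc.summary
    if length == "medium":
        # Assumption: "medium" adds only the two most important details.
        return " ".join([desc.summary, *desc.details[:2]])
    if length == "long":
        return " ".join([desc.summary, *desc.details])
    raise ValueError(f"unknown length: {length!r}")


# Made-up sample data, for illustration only.
example = SceneDescription(
    summary="Four-way intersection with marked crosswalks.",
    details=[
        "The sidewalk on the near side is wide and even.",
        "A bus stop with a shelter is on the far corner.",
        "Trees line the street to the left.",
    ],
)
print(render(example, "medium"))
```

Keeping the details in priority order means a shorter format is simply a prefix of a longer one, so a user who switches formats hears the same information in the same order.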

In the testing phase, the researchers recruited 10 visually impaired users to try SceneScout, most of whom had backgrounds in the technology industry. The results showed that 72% of the AI-generated descriptions were considered accurate. Feedback on the Virtual Exploration mode was very positive, with users saying the feature could effectively replace the traditional methods they use to gather information and would make daily travel much more convenient.

Key Points:

🌍 SceneScout, a system developed by Apple and Columbia University, provides street view navigation assistance for visually impaired users.

📊 The system combines the Apple Maps API with a multimodal large language model to generate personalized environmental descriptions.

👥 Testing showed that 72% of AI-generated descriptions were accurate, and the virtual exploration feature received high praise from users.