VectorSpaceLab recently open-sourced OmniGen2, a unified multimodal model, on the Hugging Face platform. Built on a two-component architecture with strong visual processing capabilities, it gives researchers and developers an efficient foundation for controllable image generation. The model pairs a 3-billion-parameter vision-language model (VLM), Qwen2.5-VL, with a 4-billion-parameter diffusion model. The VLM is kept frozen and interprets visual signals and user instructions, while the diffusion model uses that understanding to generate high-quality images. Together they perform strongly across four core scenarios: visual understanding, text-to-image generation, instruction-guided image editing, and in-context generation.

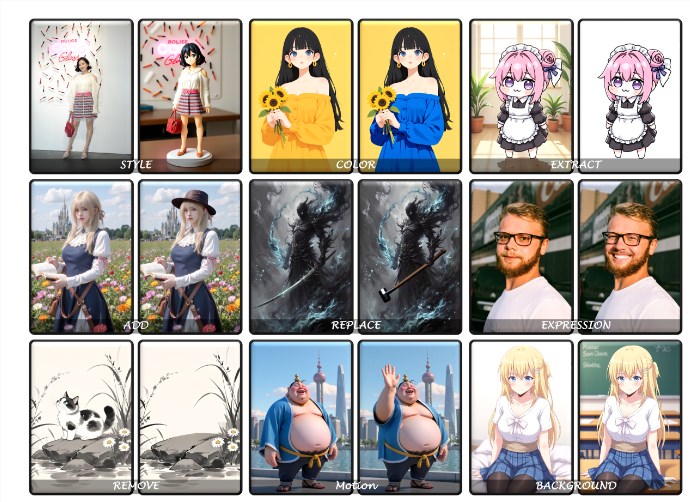

Each of the four capabilities has a distinct role. Visual understanding is inherited from the Qwen2.5-VL base, which lets the model interpret image content precisely. Text-to-image generation produces high-fidelity, aesthetically pleasing images from text prompts. In instruction-guided image editing, the model carries out complex modifications with high precision, placing it among the leading open-source models. In-context generation flexibly handles diverse inputs such as people, objects, and scenes, and combines them into coherent, novel visual outputs.

For example, users can give natural-language instructions to restyle a cartoon scene of a panda holding a teacup, add a dynamic background to a fantasy elf character, or correct details in an image such as object counts or color conflicts.

OmniGen2's model weights are now available for download, along with Gradio and Jupyter demos in which users can tune generation results by adjusting hyperparameters such as the number of sampling steps, text guidance strength, and image reference weight. The project team plans to release the training code, datasets, and data construction pipeline, and to launch an in-context generation benchmark called OmniContext. CPU offload support and integration with more frameworks are also planned. As multimodal AI finds more applications, OmniGen2's resource efficiency and broad feature set open new technical paths for personalized visual creation and intelligent design assistance.
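
To make the text guidance strength and image reference weight mentioned above more concrete, here is a minimal sketch of how two guidance scales are commonly combined at each sampling step in image-conditioned diffusion models. It uses the InstructPix2Pix-style formulation as an assumption; the article does not state how OmniGen2 combines them, and the function and parameter names (`combine_guidance`, `text_guidance_scale`, `image_guidance_scale`) are hypothetical, not OmniGen2's API.

```python
from typing import List, Sequence


def combine_guidance(
    eps_uncond: Sequence[float],
    eps_image: Sequence[float],
    eps_full: Sequence[float],
    text_guidance_scale: float,
    image_guidance_scale: float,
) -> List[float]:
    """Blend three noise predictions into one guided prediction.

    eps_uncond: prediction with neither text nor reference image
    eps_image:  prediction conditioned on the reference image only
    eps_full:   prediction conditioned on both image and text

    Assumption: InstructPix2Pix-style dual classifier-free guidance,
    not necessarily what OmniGen2 does internally.
    """
    if not (len(eps_uncond) == len(eps_image) == len(eps_full)):
        raise ValueError("all predictions must have the same length")
    return [
        # Push toward the reference image, then toward the text instruction.
        u + image_guidance_scale * (i - u) + text_guidance_scale * (f - i)
        for u, i, f in zip(eps_uncond, eps_image, eps_full)
    ]


# Toy two-element "noise predictions" standing in for real model outputs.
guided = combine_guidance(
    eps_uncond=[0.0, 0.0],
    eps_image=[1.0, 2.0],
    eps_full=[3.0, 2.0],
    text_guidance_scale=5.0,
    image_guidance_scale=2.0,
)
```

In this formulation, a higher text scale makes the output follow the instruction more closely, and a higher image scale keeps it closer to the reference image. With both scales set to 1 the result is simply the fully conditioned prediction. The number of sampling steps controls how many times such a guided prediction is computed during denoising.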