MiniMax recently announced its new model, MiniMax-M1, which the company describes as the world's first open-source, large-scale, hybrid-architecture reasoning model. It performs strongly in complex, productivity-oriented scenarios and stands out among open-source models. According to MiniMax, M1 surpasses Chinese closed-source models and approaches the level of the most advanced overseas models, while offering the best cost-effectiveness in the industry.

A notable feature of MiniMax-M1 is its context window of up to 1 million input tokens, comparable to Google's Gemini 2.5 Pro and eight times that of DeepSeek R1. It can also generate up to 80,000 tokens of reasoning output. MiniMax attributes this to its proprietary hybrid architecture, built primarily on a lightning attention mechanism, which significantly improves efficiency when handling long inputs and deep reasoning. For example, when generating 80,000 tokens of reasoning, MiniMax-M1 requires only about 30% of the compute that DeepSeek R1 does, giving it an efficiency advantage in both training and inference.
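To give a rough sense of why an attention design whose cost grows linearly with sequence length helps at these lengths, the sketch below compares the dominant cost term of standard softmax attention with that of a generic linear attention layer. It is a back-of-envelope illustration, not MiniMax's implementation or its published FLOP counts; the function names and the head dimension of 128 are assumptions chosen for the example.

```python
def softmax_attention_cost(n_tokens: int, head_dim: int) -> int:
    # Computing QK^T and the weighted sum over V each take
    # roughly n^2 * d multiply-adds per head.
    return 2 * n_tokens * n_tokens * head_dim


def linear_attention_cost(n_tokens: int, head_dim: int) -> int:
    # Building the d x d key-value state and applying it to every query
    # each take roughly n * d^2 multiply-adds per head.
    return 2 * n_tokens * head_dim * head_dim


HEAD_DIM = 128  # assumed for illustration only

for n in (8_000, 80_000, 1_000_000):
    ratio = softmax_attention_cost(n, HEAD_DIM) / linear_attention_cost(n, HEAD_DIM)
    print(f"{n:>9} tokens: softmax/linear cost ratio ~ {ratio:.1f}")
```

In this simplified model the ratio is just the sequence length divided by the head dimension, so the gap widens as the context grows. A hybrid model that retains some softmax attention layers would see a smaller overall saving than this idealized per-layer ratio.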

In addition, MiniMax proposed a faster reinforcement learning algorithm called CISPO, which improves training efficiency by clipping importance sampling weights. In experiments on AIME, CISPO converged about twice as fast as other reinforcement learning algorithms, including DAPO, recently proposed by ByteDance, and it clearly outperformed GRPO, which DeepSeek used earlier. These innovations made the reinforcement learning stage of MiniMax-M1 highly efficient: it was completed in just three weeks on 512 H800 GPUs, at a rental cost about an order of magnitude lower than initially expected.
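The idea of clipping importance sampling weights can be sketched as follows. This is a minimal illustration under stated assumptions, not MiniMax's implementation: the function names, the clipping bounds, and the toy numbers are hypothetical, and a real trainer would compute this inside an autodiff framework with a stop-gradient on the clipped weight.

```python
import math


def clipped_is_weights(logp_new, logp_old, eps_low=0.2, eps_high=0.2):
    """Per-token importance weights pi_new / pi_old, clipped to
    [1 - eps_low, 1 + eps_high]. The bounds are illustrative defaults."""
    weights = []
    for lp_new, lp_old in zip(logp_new, logp_old):
        ratio = math.exp(lp_new - lp_old)
        weights.append(min(max(ratio, 1.0 - eps_low), 1.0 + eps_high))
    return weights


def surrogate_objective(logp_new, logp_old, advantages, eps_low=0.2, eps_high=0.2):
    """Mean over tokens of clipped_weight * advantage * log-prob.
    The clipped weight acts as a constant coefficient on each token's
    log-probability term, so no token is dropped from the update."""
    weights = clipped_is_weights(logp_new, logp_old, eps_low, eps_high)
    terms = [w * a * lp for w, a, lp in zip(weights, advantages, logp_new)]
    return sum(terms) / len(terms)


# Toy example: three tokens whose probability ratios are 2.0, 1.0 and 0.5.
logp_new = [math.log(0.5), math.log(0.9), math.log(0.1)]
logp_old = [math.log(0.25), math.log(0.9), math.log(0.2)]
advantages = [1.0, 0.5, -1.0]

print(clipped_is_weights(logp_new, logp_old))
print(surrogate_objective(logp_new, logp_old, advantages))
```

In this sketch, tokens whose ratio falls outside the bounds still contribute to the objective with a bounded weight, rather than being removed from the update entirely.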

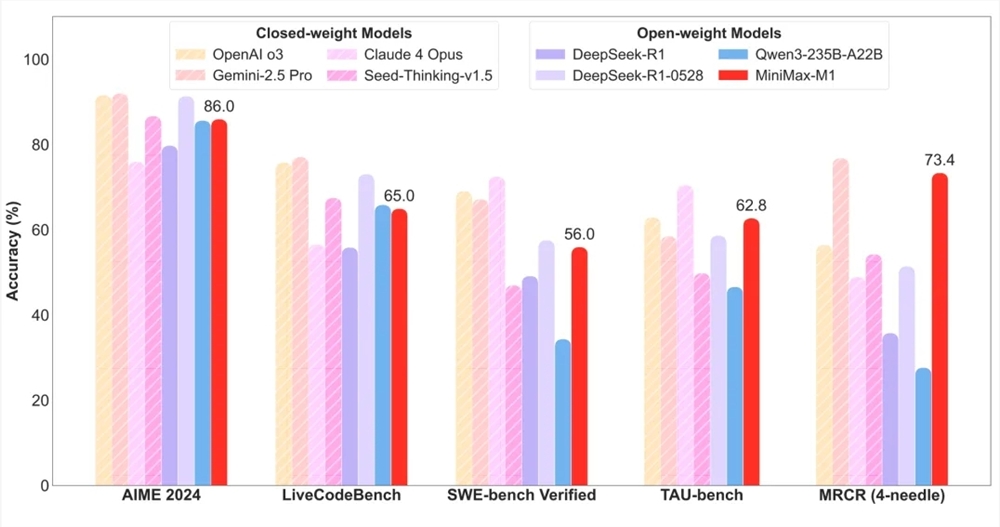

In evaluations, MiniMax-M1 achieved excellent results on 17 mainstream industry benchmarks. It showed particular strength in complex, productivity-oriented scenarios such as software engineering, long-context understanding, and tool use. On the SWE-bench Verified benchmark, for instance, MiniMax-M1-40k and MiniMax-M1-80k scored 55.6% and 56.0%, respectively. That is slightly below DeepSeek-R1-0528's 57.6% but well ahead of other open-weight models. Meanwhile, drawing on its million-token context window, MiniMax-M1 excelled in long-context understanding tasks, surpassing all other open-weight models, beating OpenAI o3 and Claude 4 Opus in some respects, and ranking second globally.

MiniMax-M1 also leads all open-weight models in the agentic tool-use scenario (TAU-bench), where it outperformed Gemini 2.5 Pro. In addition, MiniMax-M1-80k outperformed MiniMax-M1-40k on most benchmarks, supporting the effectiveness of scaling test-time compute.

On pricing, MiniMax says M1 is the lowest-priced model in the industry. Users can use the model for free, without limits, in the MiniMax app and on the web, and can purchase API access at highly competitive prices through the official website. This pricing is likely to further promote the adoption of MiniMax-M1 in the market.