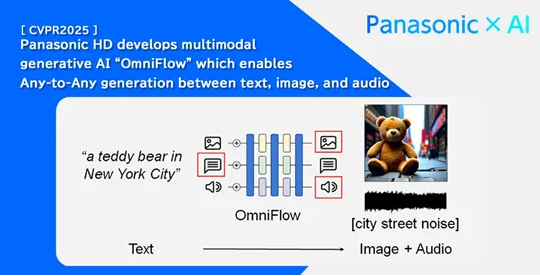

As artificial intelligence technology advances, multi-modal data processing has become a hot topic. Panasonic, the globally renowned electronics brand, has recently introduced OmniFlow, a newly developed multi-modal large model. OmniFlow converts efficiently between modalities such as text, images, and audio, supporting any-to-any generation tasks and giving users a more flexible experience.

OmniFlow is built around a modular design that allows each of its components to be pre-trained independently. This improves training efficiency and avoids the resource waste that traditional models incur when the whole model has to be trained at once. For example, the text module can be trained on massive text corpora to strengthen its language understanding and generation, while the image generation module can be trained on extensive image data to improve the quality and accuracy of the images it produces.
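As a toy illustration of independent pre-training, the Python sketch below trains two hypothetical stand-in components (`LinearModule` instances named "text" and "image") on separate synthetic datasets. Each call to `pretrain` updates only the parameters of the module it receives, so the two jobs share no state and could run at different times or on different machines. The linear models, the synthetic data, and all names here are assumptions for illustration; the article does not describe OmniFlow's actual modules or training code.

```python
import random


class LinearModule:
    """Hypothetical stand-in for one modality component: y = w * x + b."""

    def __init__(self, name):
        self.name = name
        self.w = 0.0
        self.b = 0.0

    def forward(self, x):
        return self.w * x + self.b


def pretrain(module, data, lr=0.05, epochs=200):
    """Fit one module on its own data with SGD; no other module is touched."""
    for _ in range(epochs):
        for x, y in data:
            err = module.forward(x) - y
            # Gradient of the squared error 0.5 * err**2 with respect to w and b.
            module.w -= lr * err * x
            module.b -= lr * err
    return module


rng = random.Random(0)
# Separate synthetic "corpora" for each component (illustrative only).
text_data = [(x, 2.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(50))]
image_data = [(x, -3.0 * x + 0.5) for x in (rng.uniform(-1, 1) for _ in range(50))]

# The two pre-training runs are completely independent of each other.
text_module = pretrain(LinearModule("text"), text_data)
image_module = pretrain(LinearModule("image"), image_data)
print(text_module.name, round(text_module.w, 3), round(text_module.b, 3))
print(image_module.name, round(image_module.w, 3), round(image_module.b, 3))
```

Because the datasets are noise-free and linear, each module converges to the parameters that generated its data, without ever seeing the other module's data.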

In practice, the pre-trained components can be combined and fine-tuned flexibly to suit specific needs. To take on a new multi-modal generation task, users can adjust only the relevant components instead of rebuilding the entire model, which saves a great deal of computing resources.
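The next sketch, again built from hypothetical toy components, illustrates the fine-tuning side: two "pre-trained" linear modules are chained into a pipeline, the "text" module is frozen, and only the "image" module is updated for a new synthetic task. Gradients still flow through the whole chain via the chain rule, but the frozen module's weights never change. The `trainable` flag, the pipeline structure, and the task are illustrative assumptions, not OmniFlow's API.

```python
import random


class LinearModule:
    """Hypothetical pre-trained component: y = w * x + b."""

    def __init__(self, name, w, b, trainable=True):
        self.name = name
        self.w = w
        self.b = b
        self.trainable = trainable

    def forward(self, x):
        return self.w * x + self.b


def pipeline_forward(modules, x):
    """Run x through the modules in order, recording each module's input."""
    inputs = []
    for m in modules:
        inputs.append(x)
        x = m.forward(x)
    return x, inputs


def fine_tune(modules, data, lr=0.02, epochs=300):
    """Backpropagate through the chain, but update only trainable modules."""
    for _ in range(epochs):
        for x, y in data:
            out, inputs = pipeline_forward(modules, x)
            grad = out - y  # d(0.5 * (out - y)**2) / d(out)
            for m, m_in in zip(reversed(modules), reversed(inputs)):
                # Gradient w.r.t. this module's input, using the pre-update weight.
                grad_in = grad * m.w
                if m.trainable:
                    m.w -= lr * grad * m_in
                    m.b -= lr * grad
                grad = grad_in
    return modules


rng = random.Random(0)
text_module = LinearModule("text", w=2.0, b=1.0, trainable=False)   # frozen
image_module = LinearModule("image", w=1.0, b=0.0, trainable=True)  # adapted
# New task: the combined pipeline should map x to 6x + 2.
new_task = [(x, 6.0 * x + 2.0) for x in (rng.uniform(-1, 1) for _ in range(50))]
fine_tune([text_module, image_module], new_task)
print(text_module.w, text_module.b)                        # unchanged
print(round(image_module.w, 3), round(image_module.b, 3))  # learned
```

Only the image module has to move (to w = 3, b = -1) for the chain to fit 6x + 2, which mirrors the idea of adjusting relevant components instead of retraining everything.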

Another notable feature of OmniFlow is its multi-modal guidance mechanism. By setting guidance parameters, users can precisely control how the inputs shape the output during generation. In text-to-image generation, for example, users can emphasize certain elements of the image or adjust its overall style to get results closer to what they expect.
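The article does not give the guidance formula. One common way diffusion and flow models expose per-condition weights is to generalize classifier-free guidance: the prediction made with all conditions dropped is pushed toward the prediction for each condition, scaled by that condition's weight. The sketch assumes OmniFlow's guidance behaves similarly; `guided_prediction` and its arguments are hypothetical names, and the "predictions" are plain float lists standing in for model outputs.

```python
from typing import Dict, List

Vector = List[float]


def guided_prediction(uncond: Vector, cond: Dict[str, Vector],
                      weights: Dict[str, float]) -> Vector:
    """Combine predictions as uncond + sum_k w_k * (cond_k - uncond).

    `uncond` is the model output with every condition dropped; `cond[k]` is the
    output when only condition k (e.g. "text" or "image") is supplied.
    A weight of 0 ignores a condition; larger weights emphasize it.
    """
    out = list(uncond)
    for name, pred in cond.items():
        w = weights.get(name, 0.0)
        for i, (c, u) in enumerate(zip(pred, uncond)):
            out[i] += w * (c - u)
    return out


# Usage: emphasize the text condition strongly and the image condition lightly.
uncond = [0.0, 0.0]
cond = {"text": [1.0, 2.0], "image": [0.5, -1.0]}
print(guided_prediction(uncond, cond, {"text": 2.0, "image": 0.5}))
```

Exposing one weight per condition is what lets a user strengthen one input (say, the prompt) while loosening another, rather than turning a single global knob.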

When handling inputs, OmniFlow converts multi-modal data into latent representations. Text inputs are converted into vectors that capture their semantic information; images pass through convolutional neural networks for feature extraction; and audio inputs are processed by specialized algorithms to obtain suitable representations. These latent representations are then combined with time-step embeddings and processed by Omni-Transformer blocks, which fuse information across modalities.
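To make the fusion step concrete, here is a minimal sketch under stated assumptions: each modality's latent is already a sequence of tokens of the same width, the time step is encoded with the standard sinusoidal embedding used in many diffusion models and simply added to every token, and fusion is a single-head self-attention over the concatenated tokens of all modalities ("joint attention"). Whether Omni-Transformer blocks work exactly this way is an assumption; real blocks use learned projections and multiple heads, and often inject the time embedding through adaptive normalization rather than addition. All function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def timestep_embedding(t: float, dim: int) -> List[float]:
    """Sinusoidal embedding of a scalar time step (dim assumed even)."""
    half = dim // 2
    freqs = [math.exp(-math.log(10000.0) * i / half) for i in range(half)]
    return [math.cos(t * f) for f in freqs] + [math.sin(t * f) for f in freqs]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def joint_attention(streams: List[Matrix]) -> Matrix:
    """Single-head self-attention over the concatenation of all modality streams.

    Every token attends to tokens from every modality, so information flows
    between text, image, and audio latents. Q = K = V = the tokens themselves
    (identity projections) to keep the sketch short.
    """
    tokens = [tok for stream in streams for tok in stream]
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        attn = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(attn, tokens)) for j in range(d)])
    return out


def fuse(streams: List[Matrix], t: float) -> Matrix:
    """Add the time-step embedding to every token, then attend jointly."""
    emb = timestep_embedding(t, len(streams[0][0]))
    conditioned = [[[x + e for x, e in zip(tok, emb)] for tok in s] for s in streams]
    return joint_attention(conditioned)


# Usage: toy latents for three modalities, each token of width 4.
text = [[0.1, 0.2, 0.0, 0.3], [0.0, 0.5, 0.1, 0.0]]
image = [[0.4, 0.0, 0.2, 0.1], [0.3, 0.3, 0.3, 0.3], [0.0, 0.1, 0.0, 0.2]]
audio = [[0.2, 0.2, 0.1, 0.0]]
fused = fuse([text, image, audio], t=0.5)
print(len(fused), len(fused[0]))  # one fused token per input token
```

Concatenating before attention, rather than attending within each modality separately, is what allows a change in one modality's latent to affect every other modality's output tokens.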

To evaluate OmniFlow, the research team ran multiple experiments covering various types of multi-modal generation tasks. The text-to-image experiments used several public benchmark datasets. The images generated by OmniFlow performed strongly, with a significantly lower FID (Fréchet Inception Distance), a metric of how closely generated images match the statistics of real images (lower is better). The generated images also showed strong semantic consistency with the input text, achieving high CLIP scores.
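For reference, FID compares the mean μ and covariance Σ of Inception-network features for real (r) and generated (g) images: FID = ||μ_r - μ_g||² + Tr(Σ_r + Σ_g - 2(Σ_r Σ_g)^(1/2)). The sketch below simplifies by assuming diagonal covariances, in which case the trace term reduces to a sum of squared differences of per-dimension standard deviations; standard implementations use the full covariance and a matrix square root. It also shows the cosine similarity behind CLIP score, clipped at zero and multiplied by a scale factor that differs between implementations. Feature extraction with Inception or CLIP is not shown; inputs are assumed to be precomputed feature vectors, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def mean_and_std(features: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-dimension mean and (unbiased) standard deviation."""
    n, d = len(features), len(features[0])
    mu = [sum(f[i] for f in features) / n for i in range(d)]
    var = [sum((f[i] - mu[i]) ** 2 for f in features) / (n - 1) for i in range(d)]
    return mu, [math.sqrt(v) for v in var]


def fid_diagonal(real, generated) -> float:
    """FID under the simplifying assumption of diagonal covariances.

    With diagonal covariances, Tr(S_r + S_g - 2 (S_r S_g)^(1/2)) equals
    sum_i (sigma_r_i - sigma_g_i)^2.
    """
    mu_r, sd_r = mean_and_std(real)
    mu_g, sd_g = mean_and_std(generated)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_r, mu_g))
    cov_term = sum((a - b) ** 2 for a, b in zip(sd_r, sd_g))
    return mean_term + cov_term


def clip_score(image_emb, text_emb, scale: float = 100.0) -> float:
    """Scaled cosine similarity between image and text embeddings, clipped at 0."""
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm = math.sqrt(sum(a * a for a in image_emb)) * math.sqrt(sum(b * b for b in text_emb))
    return scale * max(dot / norm, 0.0)


# Usage: generated features are the real ones shifted by 1 in each dimension.
real = [[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]]
generated = [[x + 1.0 for x in f] for f in real]
print(fid_diagonal(real, generated))
print(clip_score([1.0, 0.0], [1.0, 0.0]))
```

A shift in the means raises FID even when the spread matches, which is why lower FID indicates generated images whose feature statistics sit closer to those of real images.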

In the text-to-audio experiments, the quality of OmniFlow's output was also satisfactory: it converted input text into clear, smooth audio that met expectations, with no noticeable noise. The release of OmniFlow brings new momentum to the application prospects of multi-modal generation technology.

Key points:

🌟 OmniFlow is Panasonic's newly released multi-modal large model, capable of converting efficiently between text, images, and audio.

⚙️ The model uses a modular design that allows components to be pre-trained independently, improving training efficiency and resource utilization.

🎯 A multi-modal guidance mechanism lets users precisely control the generation process to meet different needs.